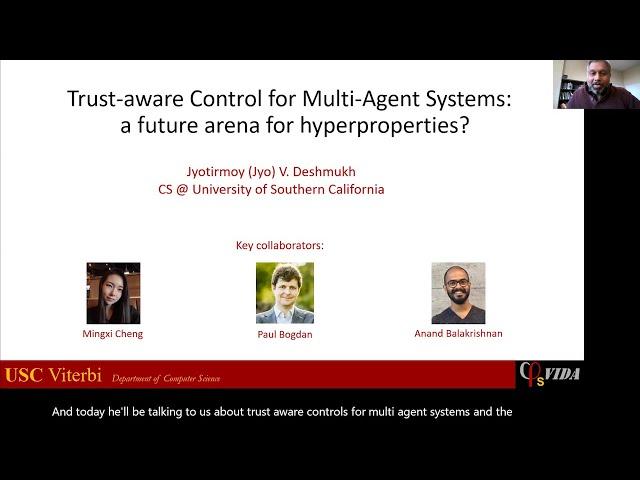

HYPER 2021: Jyotirmoy V. Deshmukh on Trust-Aware Control for Multi-Agent Systems

"Trust-Aware Control for Multi-Agent Systems: A Future Arena for Hyperproperties?", an invited talk by Jyotirmoy V. Deshmukh (USC) at the Workshop on Hyperproperties: Advances in Theory and Practice (HYPER 2021) at ATVA 2021.

Autonomous multi-agent systems often operate in uncertain environments. The safety and performance of such systems often depend on their ability to coordinate the actions of individual agents. Many algorithms either optimistically assume that all participating agents are fully trustworthy, or provide theoretical limits under which the system can achieve fault tolerance (under pessimistic assumptions of agent failure). In contrast, many real-world systems consist of agents that exhibit behavioral trends, i.e., certain agents are more likely to behave reliably, while others are demonstrably unreliable. Agents can be untrustworthy because of benign failures, malicious intent, or sporadic and adverse environmental conditions. What precise, quantitative notions of trust can we introduce for such agents (and their environments)? How can we quantify the trustworthiness of individual agents using only observations of their behaviors? Furthermore, can we use trustworthiness to regulate system behavior and achieve better safety or performance guarantees? In this talk, we give tentative answers to these questions by introducing a notion of trust (inspired by an epistemic logic), a method to quantify trustworthiness, and trust-aware control methods. We observe that our notion of trustworthiness is a hyperproperty (in a statistical sense). We will formulate some open questions on the connection between hyperproperties and our notion of trust, and on the related verification and synthesis problems for achieving trustworthy multi-agent systems.
