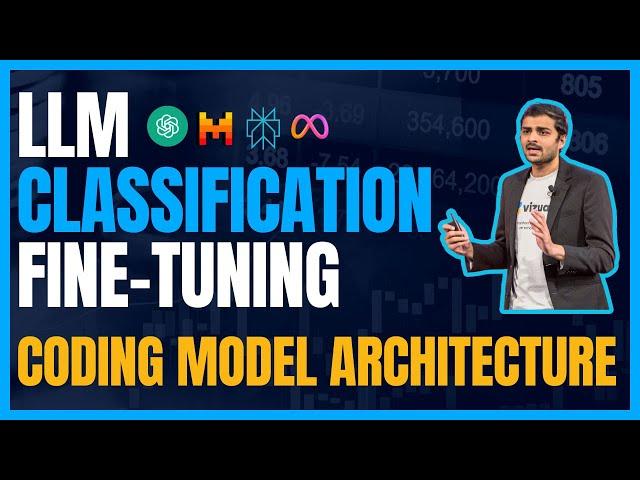

Coding the model architecture for LLM classification fine-tuning

In this lecture, we build the model architecture for the classification fine-tuning project.

We modify the original LLM architecture by adding a classification head in place of the original output layer.

We also load OpenAI GPT-2 pretrained weights into our modified model architecture.
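As a rough illustration of what "adding a classification head" can look like in PyTorch, here is a minimal sketch. It assumes the head is added by swapping the vocabulary-sized output layer for a small linear layer with one output per class. `TinyGPT`, its attribute names (`tok_emb`, `blocks`, `final_norm`, `out_head`) and all sizes are hypothetical stand-ins, not the classes or values from the lecture's code file, and the stand-in blocks contain no attention. No pretrained weights are loaded here.

```python
import torch
import torch.nn as nn


class TinyGPT(nn.Module):
    """Hypothetical stand-in for a GPT-style model (not the lecture's class)."""

    def __init__(self, vocab_size=100, emb_dim=16, n_layers=2):
        super().__init__()
        self.tok_emb = nn.Embedding(vocab_size, emb_dim)
        # Simplified feed-forward blocks; a real GPT block also has attention.
        self.blocks = nn.ModuleList([
            nn.Sequential(nn.Linear(emb_dim, emb_dim), nn.GELU())
            for _ in range(n_layers)
        ])
        self.final_norm = nn.LayerNorm(emb_dim)
        # Original output layer: one logit per vocabulary token.
        self.out_head = nn.Linear(emb_dim, vocab_size, bias=False)

    def forward(self, idx):
        x = self.tok_emb(idx)
        for block in self.blocks:
            x = block(x)
        return self.out_head(self.final_norm(x))


torch.manual_seed(0)
model = TinyGPT()

# Replace the vocabulary-sized output layer with a 2-class head
# (assumed classes: spam / not spam).
num_classes = 2
model.out_head = nn.Linear(model.out_head.in_features, num_classes)

batch = torch.randint(0, 100, (3, 5))  # 3 sequences of 5 token IDs
logits = model(batch)
print(logits.shape)  # torch.Size([3, 5, 2])
```

The model now produces two scores per token position instead of one score per vocabulary entry. The rest of the network is untouched, so pretrained weights for the embedding and transformer layers would still fit.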

0:00 Recap of classification fine-tuning so far

6:06 Loading OpenAI GPT-2 pretrained weights

16:13 Adding classification head to model architecture

20:07 Selecting which layers to fine-tune

25:01 Coding the fine-tuning architecture

31:44 Extracting last token output

33:12 Next steps
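The layer-selection and last-token steps in the outline above can be sketched as follows. This is a minimal example under stated assumptions, not the lecture's code: `TinyClassifier` and its attribute names are hypothetical, and the choice of trainable layers (last block, final layer norm, classification head) is one common option assumed here for illustration. The last token is used because, in a causally masked model such as GPT-2, it is the only position that can attend to every earlier token; the stand-in below has no attention, so it only demonstrates the indexing and shapes.

```python
import torch
import torch.nn as nn


class TinyClassifier(nn.Module):
    """Hypothetical GPT-style stand-in that already has a 2-class head."""

    def __init__(self, vocab_size=100, emb_dim=16, n_layers=2, num_classes=2):
        super().__init__()
        self.tok_emb = nn.Embedding(vocab_size, emb_dim)
        # Simplified feed-forward blocks; a real GPT block also has attention.
        self.blocks = nn.ModuleList([
            nn.Sequential(nn.Linear(emb_dim, emb_dim), nn.GELU())
            for _ in range(n_layers)
        ])
        self.final_norm = nn.LayerNorm(emb_dim)
        self.out_head = nn.Linear(emb_dim, num_classes)

    def forward(self, idx):
        x = self.tok_emb(idx)
        for block in self.blocks:
            x = block(x)
        return self.out_head(self.final_norm(x))


torch.manual_seed(0)
model = TinyClassifier()

# Step 1: freeze every parameter.
for param in model.parameters():
    param.requires_grad = False

# Step 2: unfreeze only the layers chosen for fine-tuning
# (assumed here: last block, final norm, classification head).
for module in (model.blocks[-1], model.final_norm, model.out_head):
    for param in module.parameters():
        param.requires_grad = True

trainable = [name for name, p in model.named_parameters() if p.requires_grad]
print(trainable)

# Step 3: run a batch and keep only the last token's output per sequence.
batch = torch.randint(0, 100, (3, 5))      # 3 sequences of 5 token IDs
with torch.no_grad():
    outputs = model(batch)                  # shape: (3, 5, 2)
last_token_logits = outputs[:, -1, :]       # shape: (3, 2)
predicted = torch.argmax(last_token_logits, dim=-1)  # one class index per sequence
print(last_token_logits.shape, predicted.shape)
```

Freezing most of the network keeps the number of updated parameters small, which usually makes fine-tuning faster and less prone to overwriting what the pretrained layers already encode.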

Code file: https://drive.google.com/file/d/1oXbv0T9t74pEXejQ3o7w9ZABGLjHmFsu/view?usp=sharing

Spam vs No-Spam dataset:

https://archive.ics.uci.edu/dataset/228/sms+spam+collection

=================================================

✉️ Join our FREE Newsletter: https://vizuara.ai/our-newsletter/

=================================================

Vizuara philosophy:

As we learn the AI/ML/DL material, we will share thoughts on what is actually useful in industry and what has become irrelevant. We will also share which subjects contain open areas of research, so interested students can start their research journey there.

If you are confused or stuck in your ML journey, perhaps courses and offline videos are not inspiring enough. What might inspire you is seeing someone else learn and implement machine learning from scratch.

No cost. No hidden charges. Pure old school teaching and learning.

=================================================

🌟 Meet Our Team: 🌟

🎓 Dr. Raj Dandekar (MIT PhD, IIT Madras department topper)

🔗 LinkedIn: https://www.linkedin.com/in/raj-abhijit-dandekar-67a33118a/

🎓 Dr. Rajat Dandekar (Purdue PhD, IIT Madras department gold medalist)

🔗 LinkedIn: https://www.linkedin.com/in/rajat-dandekar-901324b1/

🎓 Dr. Sreedath Panat (MIT PhD, IIT Madras department gold medalist)

🔗 LinkedIn: https://www.linkedin.com/in/sreedath-panat-8a03b69a/

🎓 Sahil Pocker (Machine Learning Engineer at Vizuara)

🔗 LinkedIn: https://www.linkedin.com/in/sahil-p-a7a30a8b/

🎓 Abhijeet Singh (Software Developer at Vizuara, GSOC 24, SOB 23)

🔗 LinkedIn: https://www.linkedin.com/in/abhijeet-singh-9a1881192/

🎓 Sourav Jana (Software Developer at Vizuara)

🔗 LinkedIn: https://www.linkedin.com/in/souravjana131/

